“If you’re just using your engineers to code, you’re only getting about half their value”

Marty Cagan (Founder, Partner SVPG)

Developers are not "feature factory" workers

In the first blog, "Pitfalls of Stack Ranking Software Engineers", I pointed out that it is very easy to place the wrong emphasis when stack ranking software developers. Since software development is more of a team sport, a developer's independent, solo code contributions must be weighed against their joint work on the code in teams of two. Only the latter contributes to knowledge sharing, team alignment and team resilience.

There is another aspect to consider. In the previous blog, we saw how a developer's effort can be split into an "Effort on Knowledge Islands %" and an "Effort on Knowledge Balances %" (plus a remaining effort with high "Contributor Friction"). In the blog "Positive Impact of Developer Contributions" [1], we saw that the code behind knowledge balances is often affected by bugs only to a small extent. This is to be expected when two developers work as a team, constantly exchanging information and reviewing each other's work.
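To make the split more concrete, here is a minimal sketch of how such percentages could be computed. It assumes a simplified definition: a file is a knowledge island for a developer if nobody else changed it in the period, a knowledge balance if exactly two developers changed it, and a "contributor friction" area if three or more did. The record format, the function name `effort_split` and the abstract effort unit are hypothetical; the tooling behind these blogs may define and weight effort differently.

```python
from collections import defaultdict

# Hypothetical input record: (developer, file path, effort) for one period.
# "Effort" is an abstract number here; the original tooling may measure it differently.
Record = tuple[str, str, float]


def effort_split(records: list[Record]) -> dict[str, dict[str, float]]:
    """Split each developer's effort (in %) by how many developers touched a file:
    1 -> knowledge island, 2 -> knowledge balance, 3+ -> contributor friction."""
    # First pass: collect everyone who changed each file in the period.
    contributors: dict[str, set[str]] = defaultdict(set)
    for dev, path, _ in records:
        contributors[path].add(dev)

    # Second pass: add each record's effort to the matching bucket.
    buckets: dict[str, dict[str, float]] = defaultdict(
        lambda: {"islands": 0.0, "balances": 0.0, "friction": 0.0}
    )
    for dev, path, effort in records:
        n = len(contributors[path])
        key = "islands" if n == 1 else "balances" if n == 2 else "friction"
        buckets[dev][key] += effort

    # Convert absolute effort into percentages per developer.
    shares: dict[str, dict[str, float]] = {}
    for dev, bucket in buckets.items():
        total = sum(bucket.values())
        shares[dev] = {
            k: round(100 * v / total, 1) if total else 0.0 for k, v in bucket.items()
        }
    return shares


records = [
    ("alice", "a.py", 6), ("alice", "b.py", 2), ("bob", "b.py", 2),
    ("alice", "c.py", 2), ("bob", "c.py", 1), ("carol", "c.py", 1),
]
print(effort_split(records)["alice"])  # {'islands': 60.0, 'balances': 20.0, 'friction': 20.0}
```

Counting contributors per file before assigning effort keeps the classification independent of the order of the records.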

But what about the quality of the code behind knowledge islands, which result from extensive solo work by individual developers? A developer can, of course, implement many features "quick and dirty" by hook or by crook, but this later leads to bugs that are not necessarily attributed to that developer alone.

The following aspects should therefore also be checked, especially for extensive knowledge islands:

- How extensive are the test code, the test coverage and other test metrics?

- Do code quality tools report findings that they urgently recommend fixing (e.g. hidden bugs)?

- What is the design or architecture quality in these code areas? Is the code maintainable, comprehensible and easy to extend with new features?
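The first two checks could be aggregated per developer roughly as follows. This is only a sketch under stated assumptions: per-file line coverage and a count of urgent findings are assumed to be exported from whatever coverage and code quality tools a team uses, and the names `FileQuality` and `island_quality` are hypothetical. Design and architecture quality (the third check) is not covered here.

```python
from dataclasses import dataclass


@dataclass
class FileQuality:
    coverage: float         # line coverage in [0, 1], assumed to come from a coverage tool
    critical_findings: int  # findings a code quality tool marks as "fix urgently"


def island_quality(island_effort: dict[str, float], quality: dict[str, FileQuality]) -> dict:
    """Effort-weighted test coverage and total urgent findings over a developer's
    knowledge-island files. Assumes `quality` has an entry for every file."""
    total = sum(island_effort.values())
    if total == 0:
        return {"weighted_coverage": 0.0, "critical_findings": 0}
    # Weight each file's coverage by how much of the developer's effort went into it.
    weighted = sum(effort * quality[path].coverage for path, effort in island_effort.items()) / total
    findings = sum(quality[path].critical_findings for path in island_effort)
    return {"weighted_coverage": round(weighted, 2), "critical_findings": findings}


island_effort = {"billing.py": 3, "export.py": 1}
quality = {"billing.py": FileQuality(0.9, 0), "export.py": FileQuality(0.1, 2)}
print(island_quality(island_effort, quality))  # {'weighted_coverage': 0.7, 'critical_findings': 2}
```

Weighting by effort means that a poorly tested file the developer barely touched weighs less than one they built largely on their own.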

In other words: has the developer not only made a large contribution on their own, but also a good one?

Is the quality of what they delivered also right?

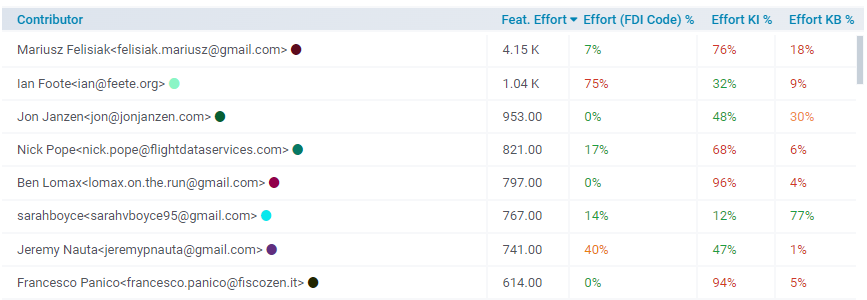

As in the previous blog, the table below covers the developers of the Django open source web framework in 2023. The first column ("Feat. Effort") shows the effort each developer contributed to the development of additional features. The criteria "Effort Knowledge Islands %" and "Effort Knowledge Balances %", which we already discussed in the first blog, measure how each developer's effort is split between solo work and work in teams of two.

The second column shows a further metric, "Effort (FDI Code) %". It represents the proportion of a developer's effort that went into code (source files) with a high "Feature Debt Index" (FDI). Among other things, "Feature Debt" measures the architectural coupling in the code between features captured in the issue tracker. (More detailed information on how the "FDI" and "Feature Effort" metrics are measured can be found here.)

Table: “Top Performer” criterion - “Effort (FDI Code) %” measures the architectural quality
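As a rough illustration of the "Effort (FDI Code) %" idea, the sketch below computes the share of a developer's effort spent on files whose FDI lies at or above a threshold. It assumes the per-file FDI values are already available (how the FDI itself is computed is not reproduced here), and both the function name `effort_fdi_code_pct` and the `threshold` value are hypothetical.

```python
def effort_fdi_code_pct(
    effort_by_file: dict[str, float],
    fdi_by_file: dict[str, float],
    threshold: float,
) -> float:
    """Share (in %) of a developer's effort spent on files whose FDI is at or above `threshold`."""
    total = sum(effort_by_file.values())
    if total == 0:
        return 0.0
    high = sum(
        effort
        for path, effort in effort_by_file.items()
        # Files without a known FDI value are treated as low-debt here (an assumption).
        if fdi_by_file.get(path, 0.0) >= threshold
    )
    return 100 * high / total


effort = {"views.py": 3, "models.py": 5, "urls.py": 2}
fdi = {"views.py": 0.8, "models.py": 0.2, "urls.py": 0.1}
print(effort_fdi_code_pct(effort, fdi, threshold=0.5))  # 30.0
```

Read this way, a value like 7% says that only a small part of the developer's work landed in files with high feature debt.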

According to the criteria from the first blog, we would have categorised Mariusz Felisiak as a "Top Performer" even though his "Effort Knowledge Islands %" of 76% is too high. Taking the broader aspect of software quality into account, we see that only 7% of his effort went into source files with a high "Feature Debt Index" value. This indicates that the design quality of his solo work is good, too.

In the first blog on stack ranking, we also categorised Nick Pope, Ben Lomax and Francesco Panico (fourth, fifth and eighth entries) as "Achievers". Their "Effort (FDI Code) %" values of 17% and 0% are acceptable (below 20%). The same applies to Jon Janzen, whom we previously categorised as a "Top Performer". Despite this new aspect, the earlier positive rating of these developers therefore still holds.

Ian Foote and Jeremy Nauta (second and seventh entries) stand out with very high "Effort (FDI Code) %" values of 75% and 40%. Their "Effort Knowledge Islands %" shares of 32% and 47% are probably acceptable, but their "Effort Knowledge Balances %" shares of 9% and 1% are extremely low. As a result, 59% and 52% of their respective effort falls outside both knowledge islands (KIs) and knowledge balances (KBs). This remaining effort relates to code areas on which at least three developers worked in the same period.
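The remaining effort is simply what is left after subtracting the KI and KB shares from 100%. The sketch below reproduces the two figures from the table and applies the 20% line mentioned for the "Achievers" above; the `Row` type and the constant name are hypothetical, chosen only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Row:
    name: str
    islands_pct: float   # "Effort Knowledge Islands %"
    balances_pct: float  # "Effort Knowledge Balances %"
    fdi_code_pct: float  # "Effort (FDI Code) %"

    @property
    def remaining_pct(self) -> float:
        # Effort outside KIs and KBs, i.e. on code touched by three or more developers.
        return 100 - self.islands_pct - self.balances_pct


FDI_ACCEPTABLE_BELOW = 20.0  # the 20% line applied to the "Achievers" above

rows = [Row("Ian Foote", 32, 9, 75), Row("Jeremy Nauta", 47, 1, 40)]
for row in rows:
    print(row.name, row.remaining_pct, row.fdi_code_pct < FDI_ACCEPTABLE_BELOW)
# Ian Foote 59 False
# Jeremy Nauta 52 False
```

Both developers exceed the 20% line, but most of their effort lies in shared code areas, which is why the high value is not simply attributed to them.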

In the blog "Rethinking Collective Code Ownership" (section "Collective Code Ownership Considered Harmful") [2], we used an example to show that precisely these code areas are more heavily affected by bugs and maintenance effort. The high "Effort (FDI Code) %" therefore cannot be attributed to these two developers. On the contrary, working on this code was probably cognitively demanding, since contributions from several developers had to be taken into account without "damaging" them.

These code areas generally require architectural refactoring or restructuring, which should be discussed within the team; they should not count against individual developers in their evaluation. How to deal with a large number of such code areas in general, and how code improvements could be included in a developer's evaluation, would be worth a blog of its own.

Counterexample

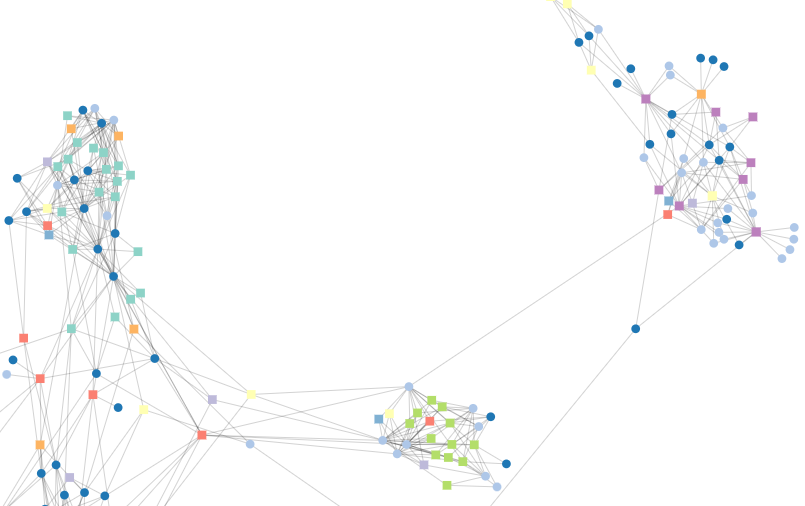

The following anonymised example shows how a very high "Effort Knowledge Islands %" can also be very misleading. The figure below shows code with very high feature coupling between source files. The rectangles represent source files, the blue circles represent feature tickets from the issue tracker, and a connection between a source file and a feature means that the file was changed to implement that feature.

Three large clusters with high feature coupling are visible: top left, top right and bottom centre. In each cluster, many files are changed chaotically by many features at the same time. The probability of unintended side effects (such as breaking existing functionality or introducing bugs) when these files are modified further is very high. Overall, the architectural quality of these code areas needs improvement, and the code in these files should be "detangled".

Figure 1: Three source file clusters with high feature coupling
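Clusters like these can be approximated from the file/feature graph itself. The sketch below uses a simple proxy, not the Feature Debt Index: two files are linked when at least `min_shared` features changed both, and clusters are the connected groups of linked files, found with union-find. The function name, the `min_shared` threshold and the ticket IDs in the usage example are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def coupled_clusters(changes: dict[str, set[str]], min_shared: int = 2) -> list[set[str]]:
    """Group source files into clusters of feature coupling.

    `changes` maps a feature ticket to the files changed for it. Two files are
    linked when at least `min_shared` features changed both of them; clusters are
    the connected groups of linked files, largest first."""
    # Count, for every file pair, how many features changed both files.
    shared: dict[tuple[str, str], int] = defaultdict(int)
    for files in changes.values():
        for a, b in combinations(sorted(files), 2):
            shared[(a, b)] += 1

    # Union-find over the strongly linked pairs.
    parent: dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), count in shared.items():
        if count >= min_shared:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = defaultdict(set)
    for path in list(parent):
        groups[find(path)].add(path)
    return sorted(groups.values(), key=len, reverse=True)


changes = {
    "FEAT-1": {"a.py", "b.py", "c.py"},
    "FEAT-2": {"a.py", "b.py"},
    "FEAT-3": {"b.py", "c.py"},
    "FEAT-4": {"x.py", "y.py"},
    "FEAT-5": {"x.py", "y.py"},
    "FEAT-6": {"z.py"},
}
print(coupled_clusters(changes))  # two clusters: {a.py, b.py, c.py} and {x.py, y.py}
```

Requiring several shared features per link filters out one-off co-changes, so only files that are repeatedly changed together end up in a cluster.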

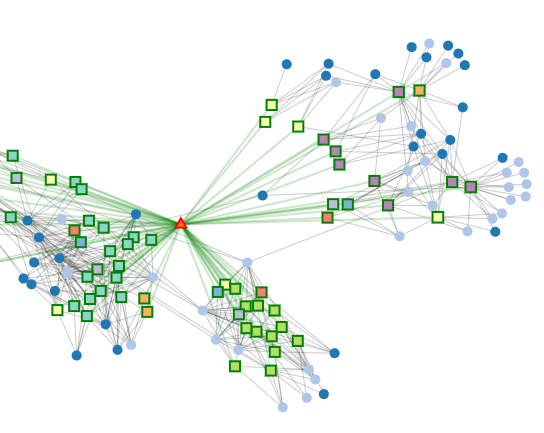

Figure 2 below shows the developers (small triangles) who worked on these files. The green connections mark the source files that the developer highlighted in red worked on.

Figure 2: Code clusters with high feature coupling and one developer

We can now see that all three clusters can be traced back to one and the same developer. This developer is extremely productive, plays a major part in the development and has implemented many features. However, the architectural quality of this code will almost certainly lead to bugs and increased effort in further development.
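Tracing a cluster back to a single developer, as in Figure 2, amounts to counting which developer changed the most files in it. A minimal sketch, assuming a hypothetical map from each file to the developers who changed it (for example, derived from version control history); the function name and developer IDs are illustrative only.

```python
from collections import Counter


def dominant_developer(cluster: set[str], authors: dict[str, set[str]]) -> tuple[str, float]:
    """Return the developer who changed the most files in `cluster`,
    together with the share of the cluster's files they changed."""
    counts = Counter(dev for path in cluster for dev in authors.get(path, set()))
    if not counts:
        raise ValueError("no authors recorded for this cluster")
    # Ties are broken arbitrarily (by iteration order).
    dev, n = counts.most_common(1)[0]
    return dev, n / len(cluster)


cluster = {"a.py", "b.py", "c.py"}
authors = {"a.py": {"dev-red"}, "b.py": {"dev-red", "dev-2"}, "c.py": {"dev-red"}}
print(dominant_developer(cluster, authors))  # ('dev-red', 1.0)
```

A share close to 1.0 across several clusters would correspond to the situation in Figure 2, where one developer touched essentially all of the highly coupled files.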

Summary

Stack ranking is on the rise again, as the reports on SAP at the end of 2023 show. In both blogs, we have highlighted important, if not the most important, pitfalls in the stack ranking of software developers. We have also explained how stack ranking can be extended with new criteria (competitive AND collaborative aspects, software quality) to avoid these pitfalls. In our view, further criteria could be added, such as measuring work on technical improvements and their positive effects, or assessing team health and the developers' risk of burnout.

Links

[0] Photo by Pexels (JAVIER PIVAS FLOS)

[1] Positive Impact of Developer Contributions

[2] Rethinking (Collective) Code Ownership (section “Collective Code Ownership Considered Harmful”)